Greetings! I am Chunhui Zhang (pronounced Ch'un-hui Chang / 張春晖). I am a CS Ph.D. candidate at Dartmouth 🌲, where I am advised by 🌟 Prof. Soroush Vosoughi. Previously, I was a research intern at Google DeepMind, Mountain View, CA, exploring SFT and RL on Gemma 3n. I also interned at Amazon, where I trained computer-use agents for GUI tasks.

CV / Google Scholar

My work focuses on scaling modular cognitive architectures for multimodal models, particularly to support their memory capacity and long-range behaviors. I specialize in optimizing training pipelines and accelerating RL systems for complex multimodal and agentic LLM training.

[Aug 21, 2025] Three papers accepted to EMNLP 2025. Thanks to my excellent collaborators. See you in Suzhou!

[Jun 2025] Started as a research intern at Google DeepMind, Mountain View, CA. Excited to meet friends in the Bay Area!

[May 15, 2025] Four papers accepted to ACL 2025 (one selected for oral presentation). Thanks to my excellent collaborators. See you in Vienna!

[May 01, 2025] Spotlight at ICML 2025 (Top 2.59%). Thanks to my excellent collaborators. Happy to revisit Vancouver!

[Apr 23, 2025] Released audio-long-form-reasoner on GitHub. It is the first implementation to combine Qwen2.5-Omni and vLLM for faster RL reasoning across audio and other unified modalities.

[Jan 22, 2025] Three papers accepted to NAACL 2025. Thanks to my excellent collaborators. See you in Albuquerque!

★ Core Contributions: key papers from my work on RL training and multimodal LLM reasoning. Full publication list (30+ papers)

Chunhui Zhang, Zhongyu Ouyang, Kwonjoon Lee, Nakul Agarwal, Sean Dae Houlihan, Soroush Vosoughi, Shao-Yuan Lo. ICML 2025 – Spotlight (Top 2.59%). Code / Paper. First scalable solution for multi-step Theory-of-Mind reasoning: Bayesian inverse planning handles global planning, so the LLM can focus on local reasoning. Works on 70B+ models, where other methods fail.

Chunhui Zhang, Sirui Wang, Zhongyu Ouyang, Xiangchi Yuan, Soroush Vosoughi. ACL 2025 – Oral Presentation (Top 3.24%). Code / Paper. Post-trained reasoning LLMs (3B, 8B, and 70B) on MCTS-sampled data from physical simulators.

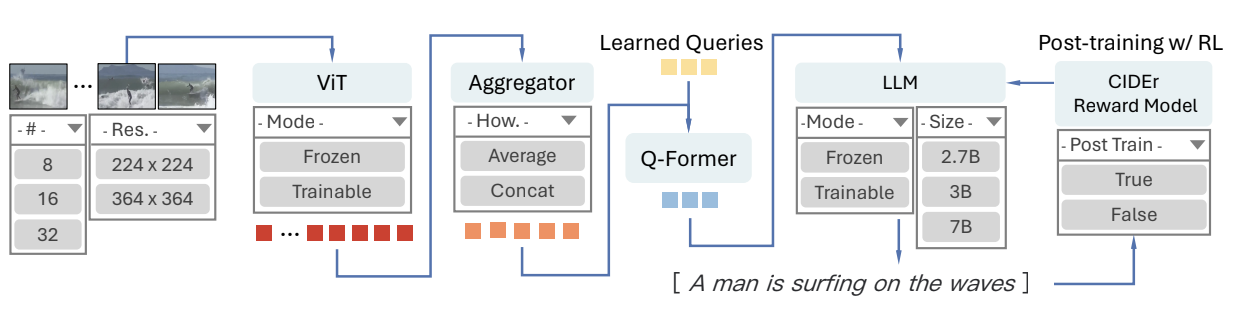

Chunhui Zhang*, Yiren Jian*, Zhongyu Ouyang, Soroush Vosoughi. NAACL 2025 Main Conference – Oral Presentation (Top 2.88%). Code / Paper / Slides. RL post-training recipe that reached Top-2 on the PapersWithCode video captioning leaderboard, outperforming industry MLLM video captioners.

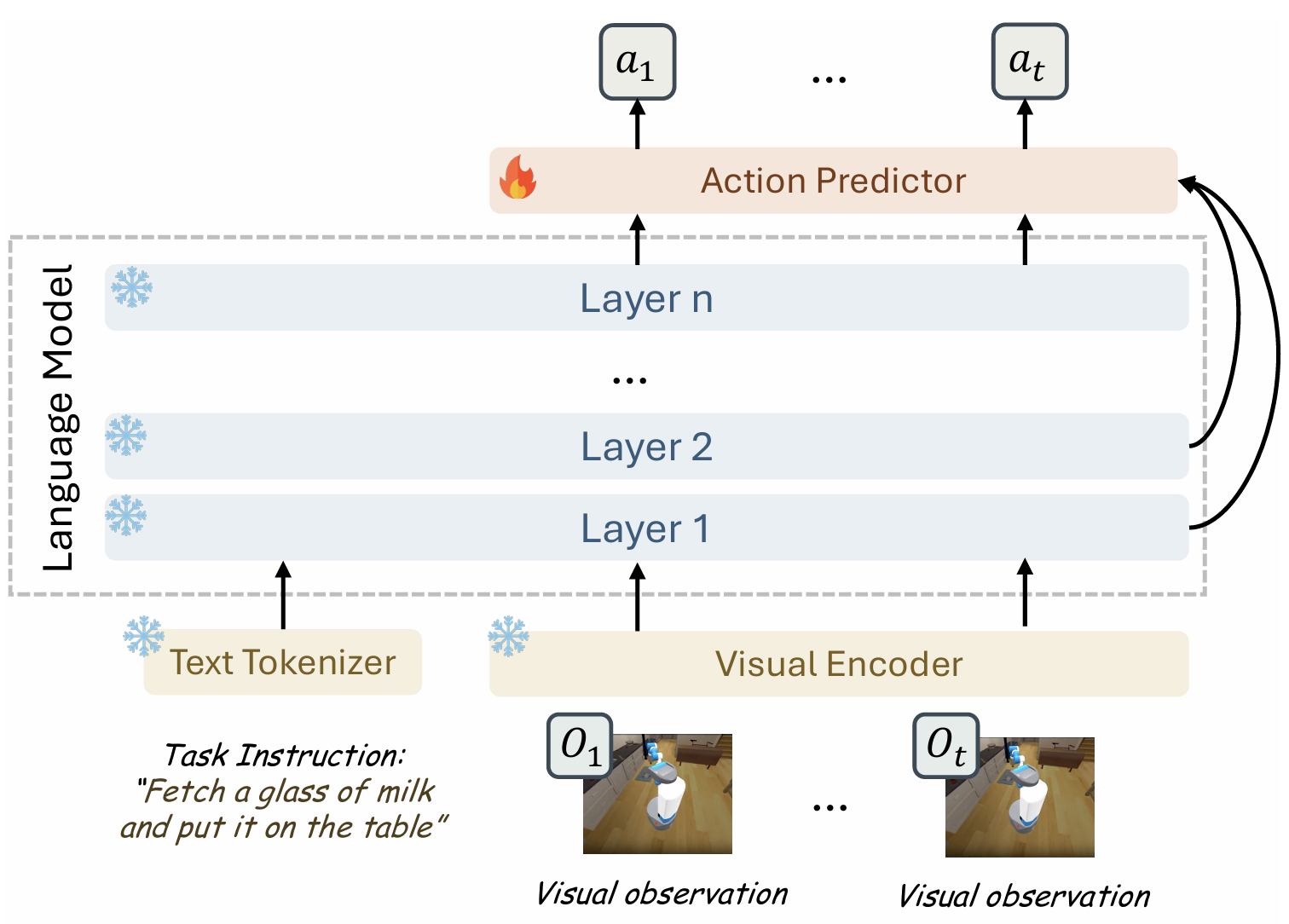

Chunhui Zhang, Zhongyu Ouyang, Xingjian Diao, Zheyuan Liu, Soroush Vosoughi. EMNLP 2025 Findings. Paper. Refines vision-language-action LLM representations to enable more effective PPO-based RL training in embodied AI.

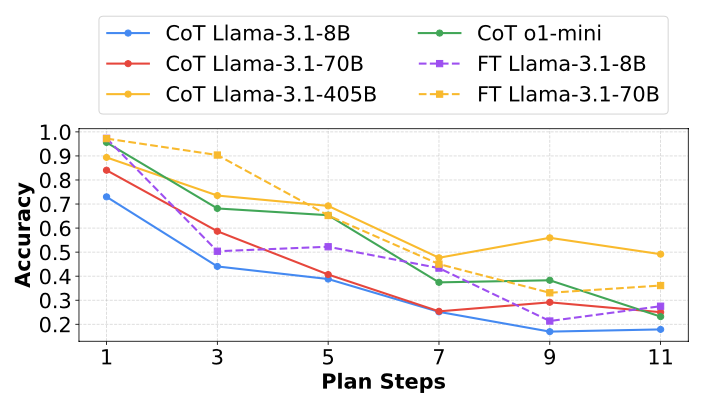

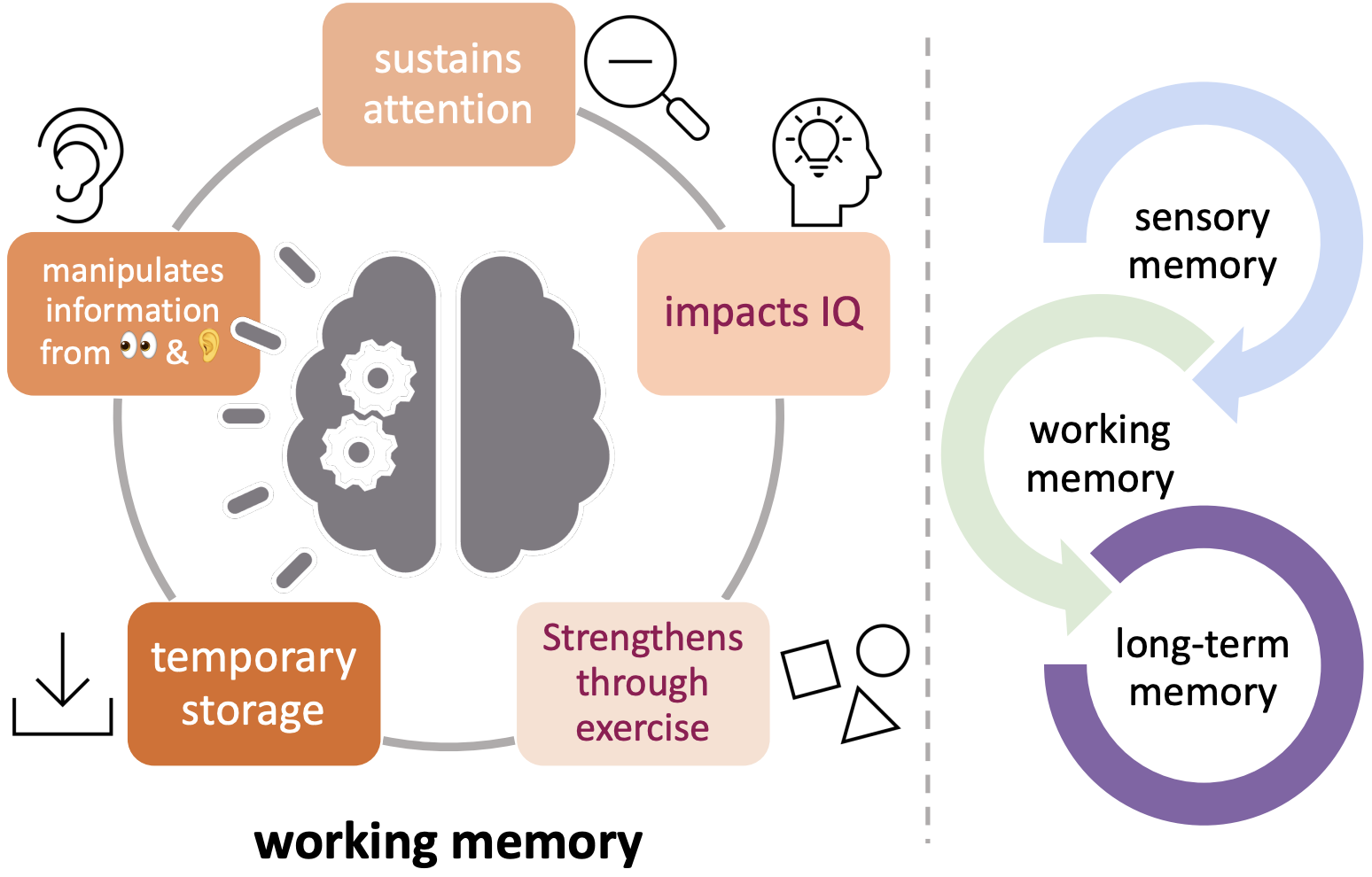

Chunhui Zhang, Yiren Jian, Zhongyu Ouyang, Soroush Vosoughi. EMNLP 2024. Code / Paper. Introduced working memory as a diagnostic tool for LLM reasoning limits. This work inspired a follow-up NAACL paper on long-context multimodal understanding.

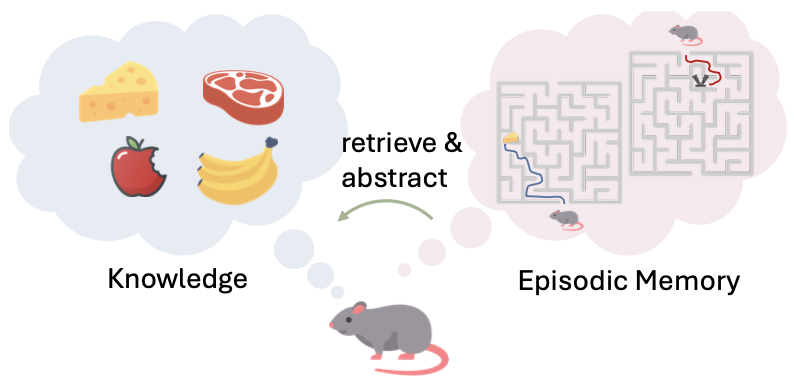

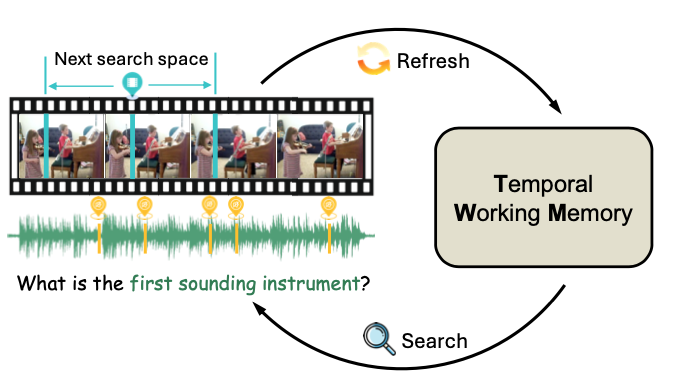

Xingjian Diao*, Chunhui Zhang*, Weiyi Wu, Zhongyu Ouyang, Peijun Qing, Ming Cheng, Soroush Vosoughi, Jiang Gui. NAACL 2025 Findings. Code / Paper. A follow-up to the working-memory study in my EMNLP 2024 paper, this work examines long-context video-language understanding.

Projects where I contributed as co-author. View all publications →

Xingjian Diao, Chunhui Zhang, Keyi Kong, Weiyi Wu, Chiyu Ma, Zhongyu Ouyang, Peijun Qing, Soroush Vosoughi, Jiang Gui. EMNLP 2025 – Oral Presentation. Paper.

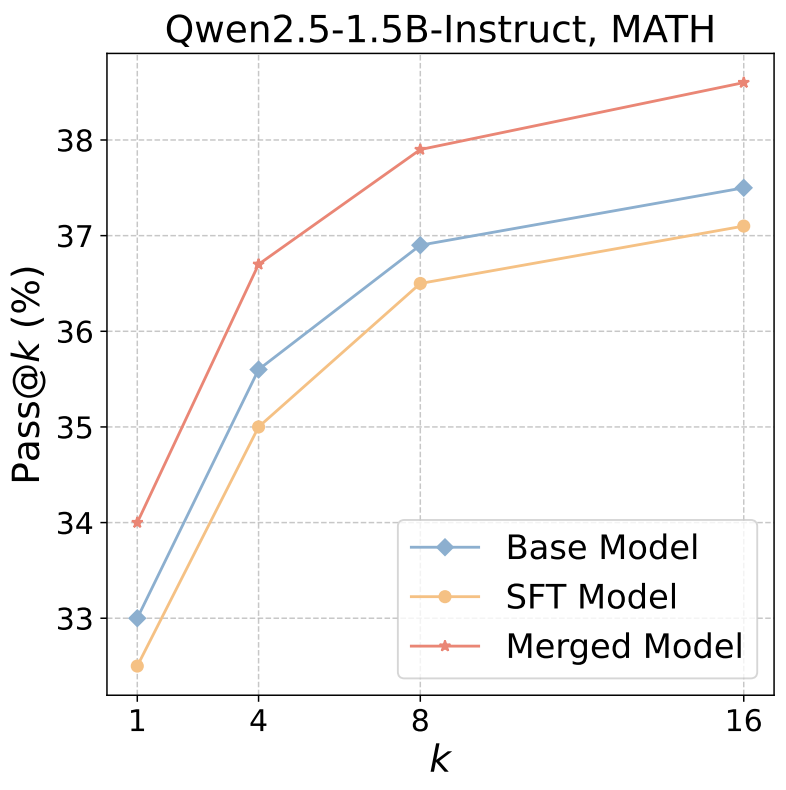

Xiangchi Yuan, Chunhui Zhang, Zheyuan Liu, Dachuan Shi, Soroush Vosoughi, Wenke Lee. EMNLP 2025. Code / Paper.

Yiren Jian, Tingkai Liu, Yunzhe Tao, Chunhui Zhang, Soroush Vosoughi, Hongxia Yang. ACL 2024 – Oral Presentation (Top 3.10%). Code / Paper.

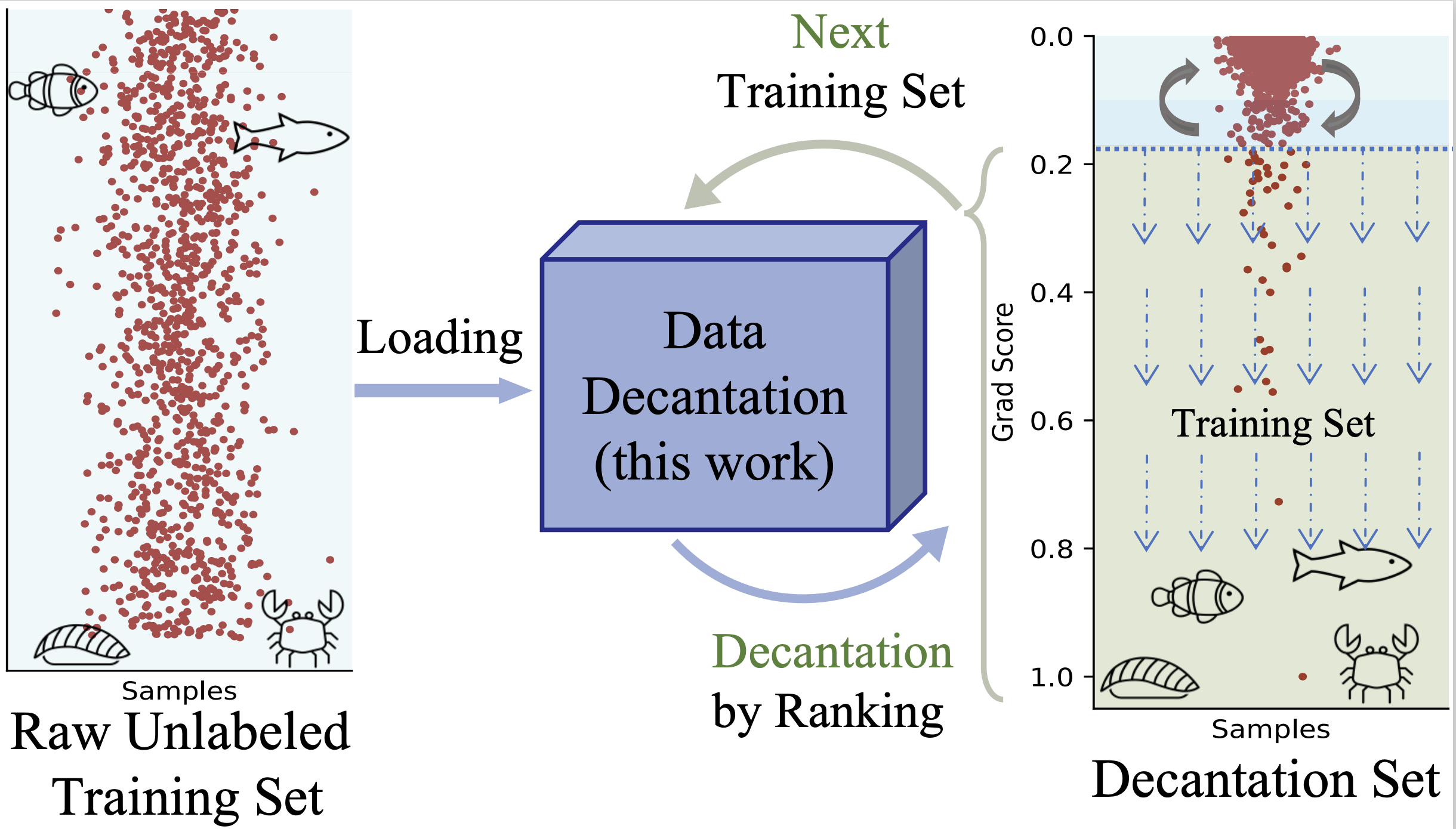

Chunhui Zhang, Chao Huang, Yijun Tian, Qianlong Wen, Zhongyu Ouyang, Youhuan Li, Yanfang Ye, et al. ICML 2023 – AAAI-DCAA 2023 Best Paper Runner-up Award. Paper.

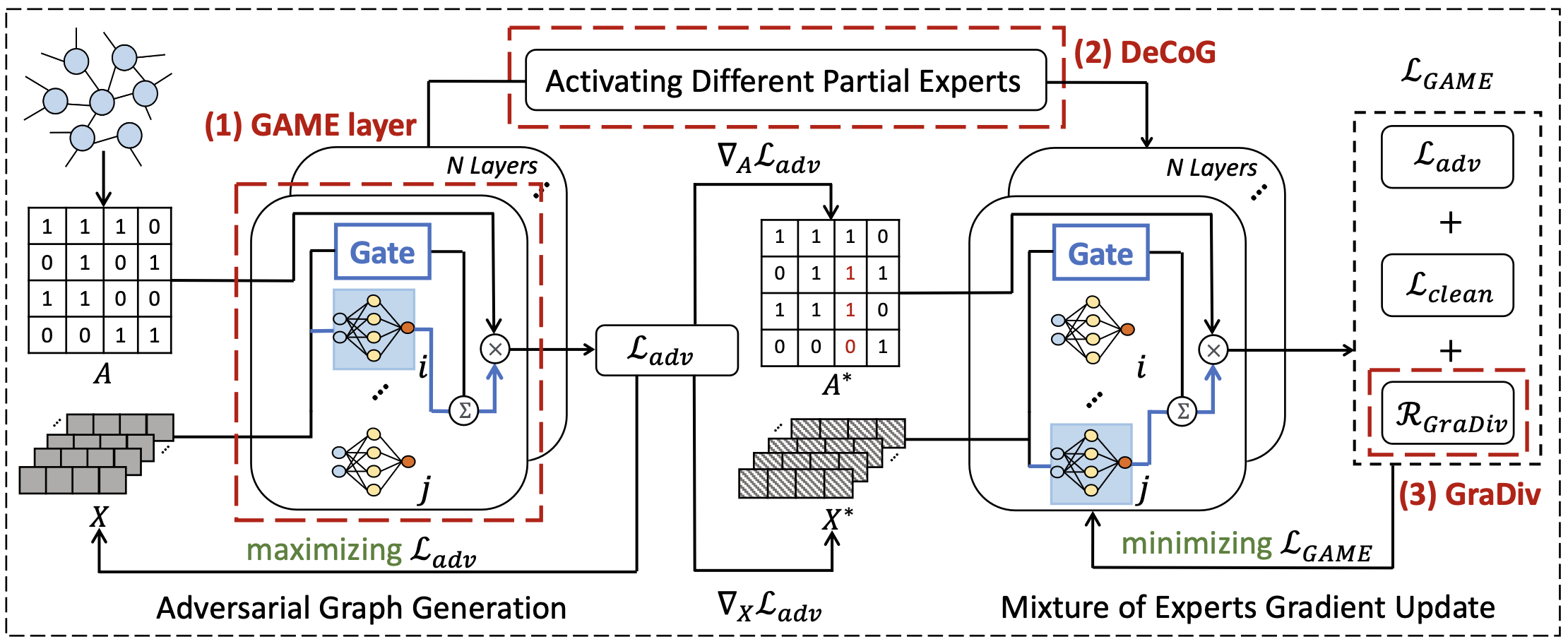

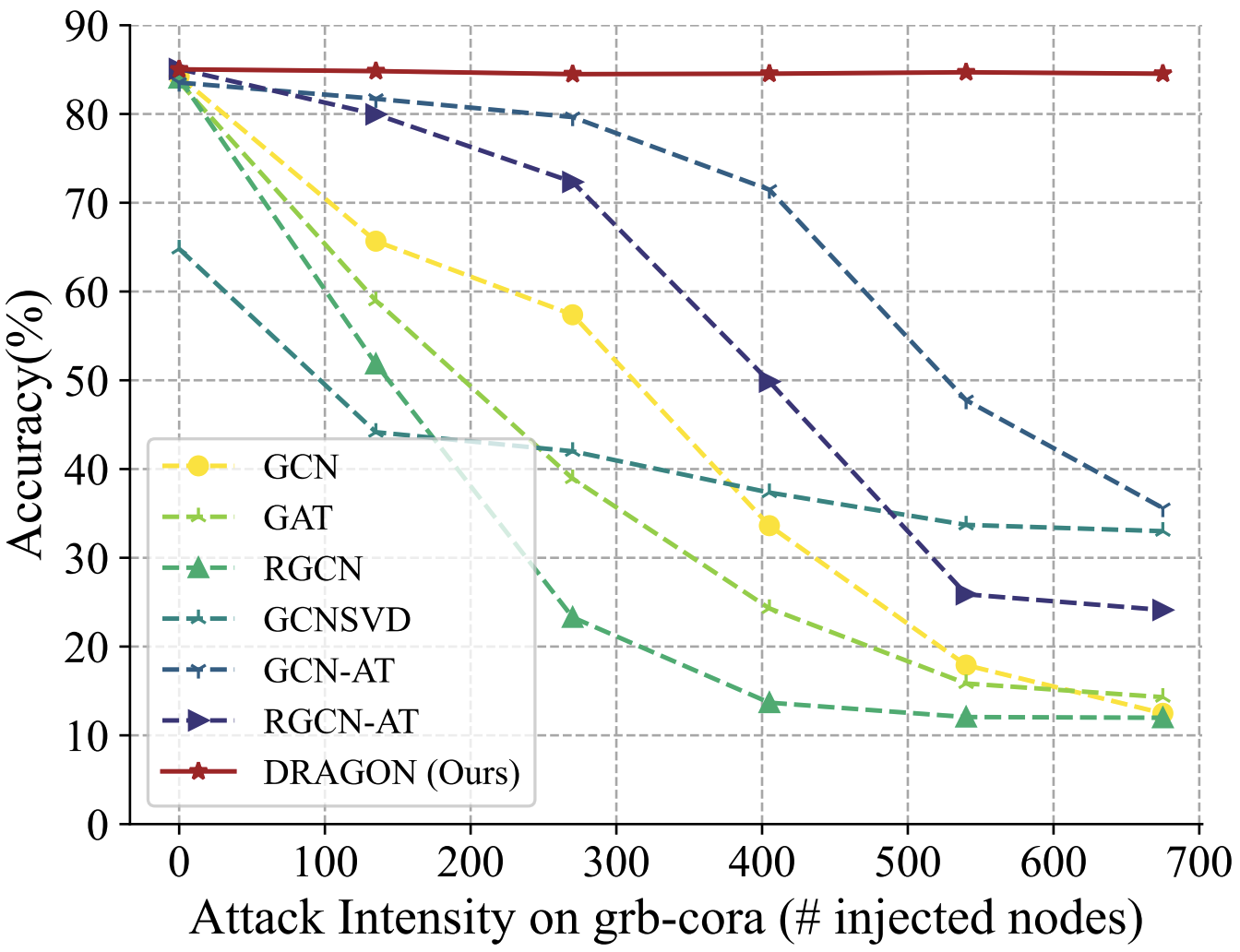

Chunhui Zhang, Yijun Tian, Mingxuan Ju, Zheyuan Liu, Yanfang Ye, Nitesh Chawla, et al. ICLR 2023. Code / Paper.

Yaning Jia*, Chunhui Zhang*, Soroush Vosoughi. ICLR 2024 (*co-first author). Code / Paper.

Xiangchi Yuan*, Chunhui Zhang*, Yijun Tian, Yanfang Ye, et al. ICLR 2024 (*co-first author). Code / Paper.

Feel free to drop me an email if you're up for a laid-back chat about life, career, or research. I set aside at least 30 minutes each week for these conversations, and I'm particularly eager to connect with students from underrepresented backgrounds or those facing challenges or inequity. Just reach out. I'm here to listen!

Racing is a happy part of my life. I particularly enjoy go-karting and circuit racing (fun fact: I have taken 1st and 2nd place at Supercharged). The one type of racing I have yet to try is also my favorite: rally driving. My favorite rally driver is Han Han.

Design and source code from this cool guy.
